There’s a curious misconception floating around, whispered by skeptics, shouted by cynics: that letting students use AI in their coursework flattens the learning curve. That it replaces thinking. That it reduces education to a copy-paste exercise. If everyone has the same tool, the logic goes, outcomes must converge. But that’s not what happens. Not even close.

In three separate university classes, I removed all restrictions on AI use. Not only were students allowed to use large language models, they were taught how to use them well: context input, prompts, revision loops, scaffolding, argument development, the full toolbox. Each group had a customized AI assistant tailored to the course content. Everyone had the same access. Everyone knew the rules. And yet what they produced differed widely.

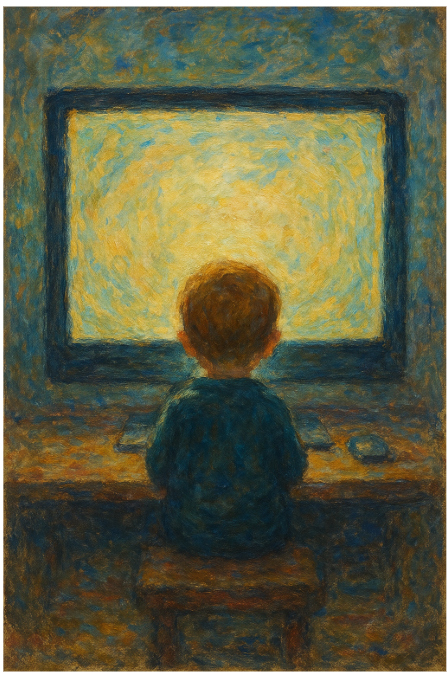

Some students barely improved. Others soared. The quality of work diverged wildly, not only in polish but in depth, originality, and complexity. It didn’t take long to see what was happening. The AI was a mirror, not a mask. It didn’t hide student ability and effort; it amplified both. Whatever a student brought to the interaction (curiosity, discipline, intellectual courage) determined how far they could go.

This isn’t hypothetical. It’s empirical. I grade every week. I see the evidence.

When given a routine assignment, generative AI can do a decent job. A vanilla college essay? Sure, it’ll pass. But I don’t assign vanilla. One of my standard assignments asks undergraduates to write a paper worthy of publication. Not on a class blog or in a campus magazine, but in a real, peer-reviewed publication.

You might think that’s too much to ask. And if the bar is “can a chatbot imitate academic tone and throw citations at a thesis,” then yes, AI can fake it. But a publishable paper requires more than tone. It requires original framing, precise argumentation, contextual awareness, and methodological discipline. No prompt can deliver all that on its own. It requires a human mind: inexperienced, perhaps, but willing to stretch.

And stretch they do. Some of these students, undergrads and grads, manage to channel the AI into something greater than the sum of its parts. They write drafts with the machine, then rewrite against it. They argue with the chatbot, they question its logic, they override its flattening instincts. They edit not for grammar but for clarity of thought. AI is their writing partner, not their ghostwriter.

This is where it gets interesting. The assumption that AI automates thinking misses the point. In education, AI reveals higher-order thinking. When you push students to do something AI can’t do alone (create, synthesize, critique), the gaps between them start to matter. And those gaps are good. They are evidence of growth.

Variance in output isn’t a failure of the method. It’s the metric of its success. If human participation did not matter, there would be no variance in output. In complex tasks, human input is critical, and that’s the only explanation for variance this large.

And in that environment, the signal is clear: where there is variance in performance, there is the possibility of growth. And where there is growth, there is plenty of room for teaching and learning.

Prove me wrong.